BI solution for case study coverage analysis

Itransition created case study and expertise scoring systems together with intuitive dashboards for fact-based analysis of expertise coverage and discovery of improvement points. This led to a 19% increase in leads and 31% more leads becoming opportunities.

Context

Itransition is a global company with 3,000+ employees and 1,500+ completed projects in dozens of industries for 800+ clients in 40+ countries. The company has expertise in many subject areas and develops scores of complex solutions using various technologies.

As a multi-vector service company, Itransition relies on case studies to back up our experience for existing and new clients. With thousands of projects, it is challenging to understand which domain is supported by case studies and which needs more work.

We created a project card system to track multiple projects: each new project receives a dedicated project card in the company’s Jira. Itransition’s marketing department assesses these project cards to plan case study creation and cover the most sought-after industries, technologies, and solutions. To assess case study coverage, marketing also relies on Google Analytics, social surveys, and meetings with the production department and competency center subject matter experts (SMEs). Marketing also constantly analyzes how and by whom case studies are used, which promising projects are emerging, which popular technologies are not yet covered, and which case studies need to be updated or archived.

Over time, the number of existing and new projects grew, as did the number of emerging technologies not covered by case studies. The human factor in manual analysis also prevented the marketing department from getting a clear picture of case study coverage. We decided to automate and optimize case study analysis using data-driven dynamic dashboards with reporting functionality beyond basic features.

For this project, we engaged our BI competency center, which has completed a number of projects, including migrating an automotive BI system to the cloud, developing smart data-driven ad campaigns, building scalable BI portals, and creating analytical reports for visualizing heterogeneous data.

Solution

Itransition’s BI team created a solution consisting of a case study scoring system, an expertise scoring system, and dynamic dashboards for users and admins. The users are our CTO and the heads of departments and competency centers; the admin is the Head of Marketing.

Case study scoring system

Cases get scored based on the following criteria:

- Client name presence

- Date of publication

- Reference letter presence

The case study formula is Case study score = 60% x NDA score + 30% x Version date score + 10% x Reference score.

The BI team has derived this formula by giving different weights to the case study success criteria. Since the presence of client names is important for sharing our expertise without being bound by NDAs, the NDA score has a 60% weight in the case study score.

The date of publication was given a 30% weight because case studies up-to-date with the current market, technologies, and IT trends have more value than outdated ones. That’s why we pay attention to how current the case study is when calculating the case score.

Lastly, cases backed up by references are scored higher and given an additional 10% weight, since references are proof of our expertise and great interpersonal relationships built during projects. So, the newest cases featuring the client and accompanied by reference letters will receive the highest score.
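The weighted formula above can be sketched in a few lines of Python. This is only an illustration, not the production DAX calculation: the `CaseStudy` class, its field names, and the 0-100 scale of each component score are assumptions, since the text does not specify how the individual component scores are derived.

```python
from dataclasses import dataclass

# Weights taken from the case study score formula.
NDA_WEIGHT = 0.60
VERSION_DATE_WEIGHT = 0.30
REFERENCE_WEIGHT = 0.10


@dataclass
class CaseStudy:
    """Hypothetical container; each component is assumed to be on a 0-100 scale."""
    title: str
    nda_score: float           # assumed: high when the client can be named
    version_date_score: float  # assumed: high for recently published cases
    reference_score: float     # assumed: high when a reference letter exists


def case_study_score(case: CaseStudy) -> float:
    """Weighted sum of the three component scores."""
    return (
        NDA_WEIGHT * case.nda_score
        + VERSION_DATE_WEIGHT * case.version_date_score
        + REFERENCE_WEIGHT * case.reference_score
    )


# Made-up examples: a recent, named, referenced case and an older anonymous one.
named_recent = CaseStudy("Hypothetical case A", 100, 100, 100)
anonymous_old = CaseStudy("Hypothetical case B", 0, 50, 0)
print(case_study_score(named_recent))
print(case_study_score(anonymous_old))
```

With these made-up inputs, the anonymous older case can reach at most 30% of the maximum score from its publication date alone, which shows how strongly the 60% NDA weight dominates the result.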

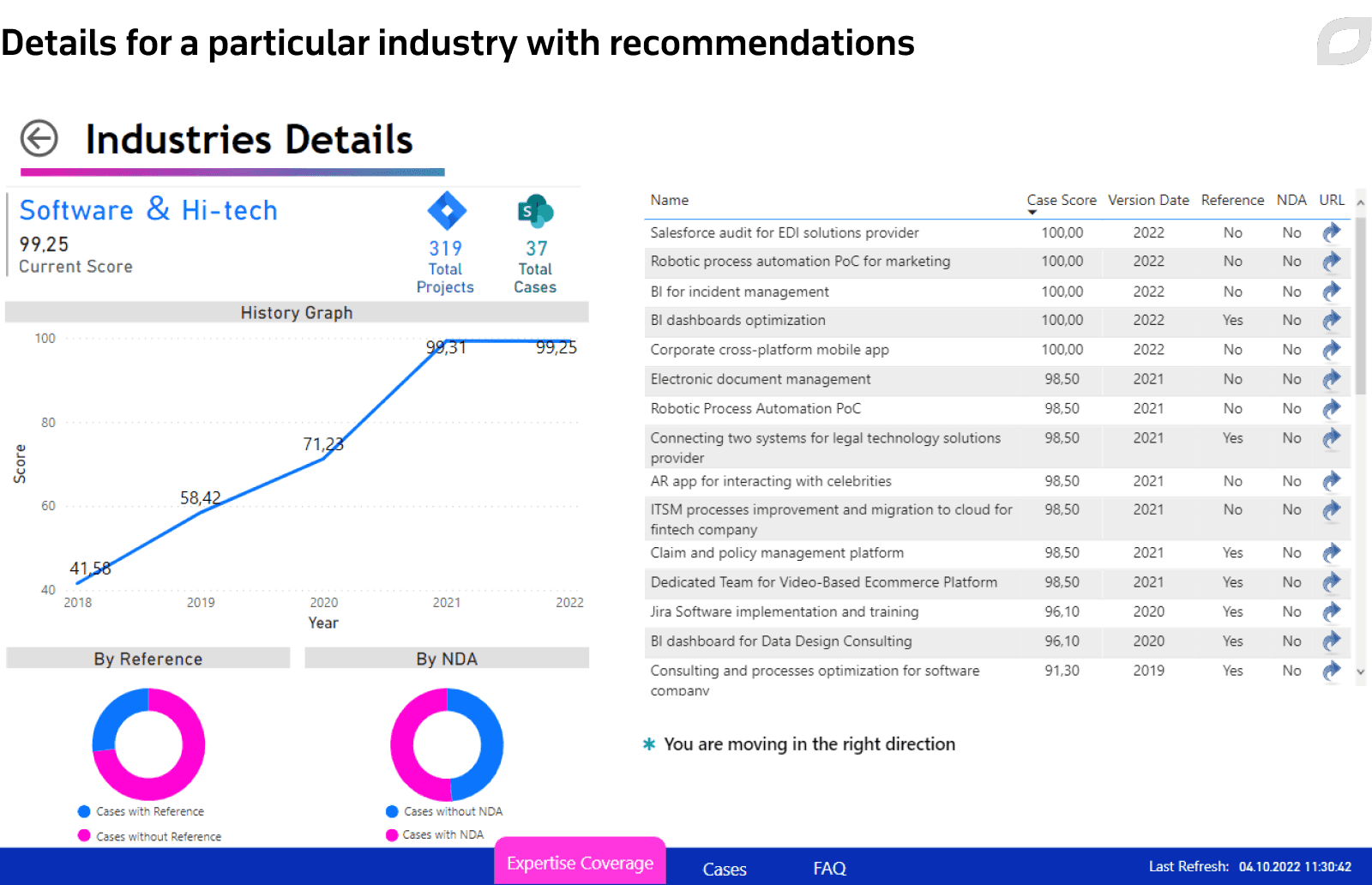

Expertise scoring system

As the industry is a more generic factor, it is given a higher requirement for the number of case studies. Industry scores are calculated as the average of the top ten case studies’ scores:

Industry score = Average(Top 10 case studies score)

Technologies and solutions are more specific aspects and have more lenient requirements for the number of case studies. Technology and solution scores are calculated as the average of the top five case studies’ scores:

Technology score = Average(Top 5 case studies score)

Solution score = Average(Top 5 case studies score)
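As a rough illustration of the expertise formulas, the sketch below averages the highest case study scores for a category. The function names are hypothetical, and averaging only the available cases when a category has fewer than the required number is an assumption made for this example.

```python
def expertise_score(case_scores: list[float], top_n: int) -> float:
    """Average of the top_n highest case study scores.

    Assumption: if fewer than top_n cases exist, the available ones are averaged.
    """
    top = sorted(case_scores, reverse=True)[:top_n]
    return sum(top) / len(top) if top else 0.0


def industry_score(case_scores: list[float]) -> float:
    # Industries use the top 10 case studies.
    return expertise_score(case_scores, top_n=10)


def technology_score(case_scores: list[float]) -> float:
    # Technologies (and, likewise, solutions) use the top 5 case studies.
    return expertise_score(case_scores, top_n=5)


# Made-up scores for one category.
scores = [90.0, 80.0, 70.0, 60.0, 50.0, 40.0]
print(technology_score(scores))  # averages 90, 80, 70, 60, 50
print(industry_score(scores))    # only six cases exist, so all six are averaged
```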

Dynamic dashboards

The dashboards demonstrate the current state of the company’s expertise coverage by case studies, facilitating data-driven decision-making.

User dashboards

The dashboard consists of three tabs: expertise coverage, case analysis, and a FAQ section explaining how to use the dashboard.

Expertise coverage by industry, technology, and solution gives users a full picture of the project and case coverage, specifying categories with good and bad coverage as well as the number of cases in each category. The tab includes:

- Search by a certain category, sorting expertise areas in a particular order, cross-filtering, and a top cases list

- Details about existing expertise, recommendations for heads of departments responsible for the expertise, a project list in Jira and cases list in the marketing materials library, and a historical chart of the industry score

- Navigation tooltips

The dashboard highlights in green the areas where Itransition’s expertise is extensively covered by projects and cases. For yellow and red areas, the responsible parties need to create new cases and update existing ones to prove relevant experience.

Users can sort the order of cases in the industry, technologies, and solutions tables by project count, case count, and case score. Another option is to view them in alphabetical order.

To make searches easier, BI developers created a cross-filtering feature. When you select a specific technology, the other tables show only the industries and solutions that have cases featuring that technology. For example, if you tap Azure in the Technology column, the industries on the right and solutions on the left will only contain Azure cases.
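The idea behind cross-filtering can be sketched outside Power BI as a simple filter over tagged cases. This Python example is only an illustration of the behavior, not the DAX implementation used in the dashboard; the `Case` class and the sample data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """Hypothetical case record tagged with its expertise areas."""
    title: str
    industries: frozenset[str]
    technologies: frozenset[str]
    solutions: frozenset[str]


def cross_filter_by_technology(cases: list[Case], technology: str):
    """Return the matching cases plus the industries and solutions they cover."""
    matching = [c for c in cases if technology in c.technologies]
    industries = sorted({i for c in matching for i in c.industries})
    solutions = sorted({s for c in matching for s in c.solutions})
    return matching, industries, solutions


# Made-up sample data.
cases = [
    Case("Case A", frozenset({"Healthcare"}), frozenset({"Azure"}), frozenset({"BI"})),
    Case("Case B", frozenset({"Automotive"}), frozenset({"Azure", "Power BI"}), frozenset({"BI", "DWH"})),
    Case("Case C", frozenset({"Retail"}), frozenset({"Java"}), frozenset({"Ecommerce"})),
]

matching, industries, solutions = cross_filter_by_technology(cases, "Azure")
print([c.title for c in matching], industries, solutions)
```

Selecting "Azure" leaves only the industries and solutions that appear in at least one Azure case, which is the behavior the Azure example above describes.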

Additional functionality developed includes:

- Pop-ups showing the top 10 cases with their title and score

- Similar pop-ups for technologies and solutions with the top 5 cases

- Information icons with tooltips explaining how to use different features

- Industry, technology, or solution details with relevant recommendations to update existing cases, create brand-named cases, and get more references from clients

- Quick access to cases in the marketing materials library and project card lists in Jira

- Tooltips attached to tables with detailed information about the case score

For users’ convenience, developers added a quick access feature that instantly opens a chosen industry list of cases and project cards in the marketing materials library and in Jira.

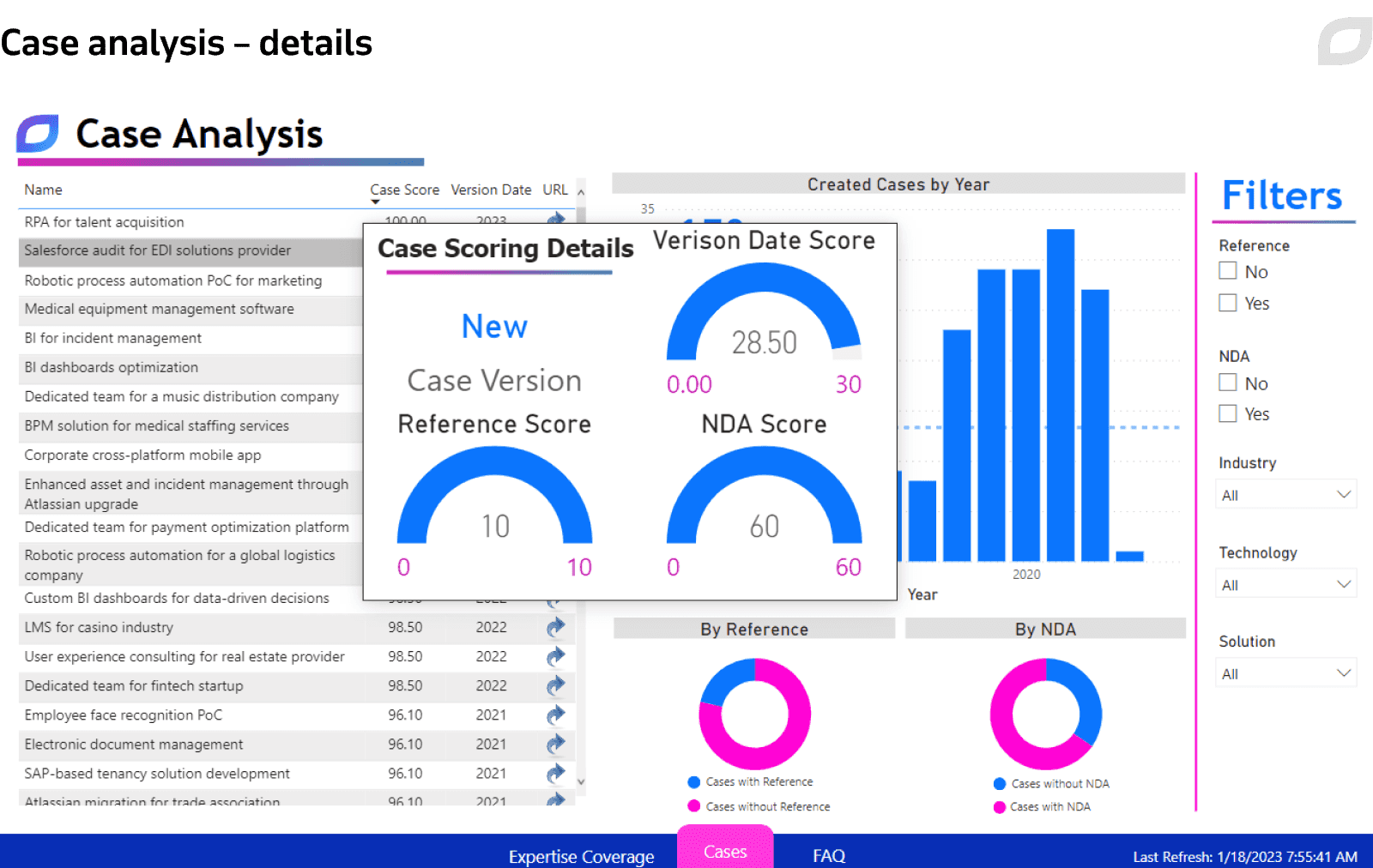

Cases

The case analysis tab features metrics such as case scores, case URLs, and details. It also enables users to access the case folder in the marketing materials library and zoom in on case details. The case analysis includes details by release date, reference, and NDA, as well as filters and URLs of cases in the library of marketing materials.

Details

The case analysis details panel shows the case score values for selected cases from the table.

Filters

Users can filter the cases list by selecting a value in the panel based on the presence of references and NDAs or search by a particular industry, solution, or technology.

URL

On the case analysis page, the table contains a URL column with a link to a folder in the marketing materials library for quick access to the case study.

Tooltips

Tooltips attached to tables with detailed information about the case score show the top cases in a particular industry, technology, or solution.

Project card lists available via the board allow us to see what ongoing projects we can use to fully cover a certain area of expertise. This also helps analyze our capacity for case creation.

Process

Based on the input provided by the process SMEs, we created a specifications document describing the business process. The process owner reviewed it and confirmed the accuracy and completeness of the steps, context, impact, and the full set of process exceptions. We also created a channel for bug reporting, sorting, and fixing. We relied on the marketing department for support in data cleaning.

We followed a classic Scrum workflow with sprint planning sessions where we discussed sprint goals and deadlines, daily scrums to schedule tasks for the day, and sprint reviews to go through results and achievements. After every sprint, we demoed the finished iteration to the client and held a retrospective meeting.

After kick-off and team introduction, the BI developer sketched mockups of the future solution with different design options and created a PoC using the approved features.

Since heterogeneous data came from multiple sources and was manually entered into the marketing materials library, we needed to make sure human error wouldn’t skew our results. The library was analyzed for metadata inconsistencies that were fixed right away.

The team also interviewed focus groups with different functionality stories. We chose focus groups based on their roles in the company: heads of departments and competency centers, the Head of Sales and our Account Management program, and our CTO. The heads of departments and centers were chosen because they could give us insight into the data they needed to gauge the situation within their departments and centers and ensure all the right domains were covered by cases. They also shared ways to encourage the creation of relevant case studies. The CTO, as the owner of the project card system, helped us implement this feature effectively and advised us on the realization of expertise coverage.

After interviews with the focus groups, we collected the acquired information and analyzed the proposed ideas, summarizing and categorizing them. We planned features for urgent implementation into production and documented those planned for later implementation. As a result, our project requirements and prioritization changed. We added many essential features thanks to the conducted interviews, including project card integration and assigning lower scores to older case studies.

We also performed UAT, analyzed and categorized feedback from all departments, evaluated the results, assessed user wishes, and planned the development of the most popular features, delivering a product that satisfies all parties.

The BI team, together with marketing and SMEs, finalized the solutions for user and admin dashboards. To calculate the optimal weights, we built a PoC in which slicers changed the weights of the variables in the formula. Then we added a parameter that takes into account case views over the last 3 months by everyone except the marketing department and assigned it a weight of 5%. To balance out the formula, we made a slicer for choosing how many of the best-scored cases are included in the industry score. After approval, we finalized the case calculation formula together with the BA.

When we had difficulties developing a specific feature, we held ad-hoc meetings to reach a compromise. For example, we had to decide how to score industries where the number of cases was lower than 10. We discussed the following scenarios: lowering the overall score and, if so, by how much; counting the missing cases as 0; or lowering the score by a fixed amount. We decided to use the following rules: for industries, take the top 10; for technologies and solutions, take the top 5 each; remove zero values for user viewing and ignore them in calculations.
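To show why this choice matters, the sketch below compares two of the discussed scenarios for an industry with fewer than 10 cases: counting missing cases as 0, and ignoring missing or zero values. The function names and sample scores are hypothetical, and the second function reflects one reading of the adopted rule rather than the exact production logic.

```python
def average_with_missing_as_zero(scores: list[float], required: int) -> float:
    """Scenario: every missing case counts as a score of 0."""
    top = sorted(scores, reverse=True)[:required]
    return sum(top) / required


def average_ignoring_missing(scores: list[float], required: int) -> float:
    """Scenario: missing and zero values are left out of the calculation."""
    top = [s for s in sorted(scores, reverse=True)[:required] if s > 0]
    return sum(top) / len(top) if top else 0.0


# A made-up industry with only four case studies, all scored 80.
industry_cases = [80.0, 80.0, 80.0, 80.0]
print(average_with_missing_as_zero(industry_cases, required=10))
print(average_ignoring_missing(industry_cases, required=10))
```

Under the first scenario, four strong cases yield a low industry score because six zeros are averaged in; under the second, the score reflects only the quality of the existing cases, while the case count remains visible separately on the dashboard.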

As the goals and requested features changed during the project, we had to set up the process of working with change requests and new feature implementation such as adding the information box, new buttons and calculations, project card links, etc. The BI team analyzed each new feature’s tech feasibility. If it passed the tech review, the approval of the SME and process owner, and requirements approval, the developers planned its implementation and delivered it to production.

Technologies

Microsoft Azure

BI developers chose Microsoft Azure to develop the data warehouse for the solution and Azure SQL for the database, using the snowflake schema to arrange tables in a multidimensional database.

For the data model, we opted for the Kimball methodology, also known as the business dimensional lifecycle approach. The team chose it to get the following benefits:

- Speed of development

- Simplified querying and analysis

- Database space-saving that simplifies system management

- Fast data retrieval from the data warehouse

- A small team being sufficient for warehouse management

- Uncomplicated and easy-to-control query optimization

- Better data quality and more reliable insights

- Possibility of building multiple star schemas to meet different reporting needs

The data flow is as follows: CSV files are extracted from the primary data sources (marketing materials library, Google Analytics, etc.) using an ETL tool. The data is then evaluated, loaded into Azure Blob, transformed using Azure Data Factory (ADF), and stored in the DWH.

We loaded data into a denormalized dimensional data warehouse model, dividing it into fact tables, which hold numeric transactional data, and dimension tables, which hold the reference information that supports the facts.

We chose the Star schema that combines a fact table with several dimensional tables and utilizes a bottom-up approach to data warehouse architecture design, with data marts formed depending on business requirements. We chose this schema for its ease of understanding and implementation, as it allows users to find the necessary data easily.
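A star schema of this kind can be illustrated with a small, self-contained example. The sketch below uses Python's built-in `sqlite3` module as a stand-in for Azure SQL, and all table names, column names, and rows are hypothetical; the actual warehouse model is not described in this level of detail.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for the real warehouse
conn.executescript("""
    -- Dimension tables hold reference information.
    CREATE TABLE dim_industry (industry_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE dim_technology (technology_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    -- The fact table holds numeric data and points to the dimensions.
    CREATE TABLE fact_case_score (
        case_id INTEGER NOT NULL,
        industry_id INTEGER NOT NULL REFERENCES dim_industry(industry_id),
        technology_id INTEGER NOT NULL REFERENCES dim_technology(technology_id),
        case_score REAL NOT NULL
    );
""")
conn.executemany("INSERT INTO dim_industry VALUES (?, ?)",
                 [(1, "Healthcare"), (2, "Automotive")])
conn.executemany("INSERT INTO dim_technology VALUES (?, ?)",
                 [(1, "Azure"), (2, "Power BI")])
conn.executemany("INSERT INTO fact_case_score VALUES (?, ?, ?, ?)",
                 [(101, 1, 1, 90.0), (102, 1, 2, 70.0), (103, 2, 1, 60.0)])

# A typical star-schema query: join the fact table to one dimension and aggregate.
rows = conn.execute("""
    SELECT i.name, COUNT(*) AS case_count, AVG(f.case_score) AS avg_score
    FROM fact_case_score AS f
    JOIN dim_industry AS i ON i.industry_id = f.industry_id
    GROUP BY i.name
    ORDER BY i.name
""").fetchall()
print(rows)
```

Each report question becomes a single join from the fact table to the relevant dimension, which is the ease of querying the schema was chosen for.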

To integrate data within our data warehouse lifecycle we used conformed data dimensions in two ways:

- as a basic dimension table shared across different fact tables (such as customer and product) within a data warehouse

- as the same dimension tables in various Kimball data marts

This way we ensured that a single data item is utilized in a similar manner across all the facts.

The table below details the features, project use, and results of the Microsoft Azure technologies the BI team used:

| Service | Features | Project use | Results |
|---|---|---|---|
| Azure Data Factory | Fully managed serverless ETL service; flexible and scalable; easy to meet performance requirements | Allowed us to forego using actual servers and subscribe to a pay-as-you-use plan | Serverless ETL solution with autoscaling and multiple features |
| Azure SQL Server | Fully-managed database; automated updates, provisioning, and backups; flexible and responsive; storage rapidly adapting to new requirements; intelligent threat detection | Common data warehouse for all BI systems, used by other Itransition teams; no load on the main OLTP system; complete decoupling of the OLTP and OLAP processing | High availability; peak performance and durability |

Power BI

The team opted to use Power BI as a business intelligence and data visualization tool for creating dynamic custom dashboards using a variety of different data sources. We selected this suite because it turns unrelated data sources into consistent, reliable, easy-to-digest, and interactive insights for smart decision-making.

Power BI has the following advantages over other solutions on the market:

- Large data storage

- E-mail alerts for metrics tracking

- A centralized view of KPIs

- Intuitive at-a-glance visualizations

- Cloud-based features for collaboration and sharing

- Mobile apps for insights on the go

- Easy data filtering

- Next-level graphics

Our BI developers wrote a script to download our monthly report from Power BI, convert it into a PDF document, and upload it to the marketing materials library. This allowed us to cut Power BI Premium license costs for dozens of report users.

On top of Power BI, we set up Row-Level Security, restricting data row access depending on user roles or execution context. This allowed us to simplify design and security coding.

We also used Power Automate, integrated with Power BI, to build automated processes using its low-code, drag-and-drop features. Calculations were written by translating math formulas into the Power BI DAX language.

BI developers also set up message delivery and notifications using email subscriptions for reports and dashboards in Power BI.

Challenges

We ran into technical challenges developing the tooltip for the top 10 and top 5 cases. DAX has a function that returns the top 10 rows, but when several cases share the same score, it can return more than 10. For example, if four additional cases tie with the tenth case, DAX returns 14 cases, not 10. Thus, we needed to prioritize equally scored case studies to bring the number down to 10. We decided to prioritize the newest cases, using case IDs assigned by the creation date to determine how new they are.
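The tie-breaking rule can be sketched as a two-key sort. This Python example only illustrates the logic; the team implemented it in DAX, and the `top_cases` function and the sample data here are hypothetical. It assumes, as described above, that a higher case ID means a newer case.

```python
def top_cases(cases: list[tuple[int, float]], n: int) -> list[tuple[int, float]]:
    """Return exactly n cases (or fewer if not enough exist).

    Each case is a (case_id, score) pair. Cases are ranked by score, and ties
    are broken by case ID in descending order, so newer cases win.
    """
    ranked = sorted(cases, key=lambda c: (c[1], c[0]), reverse=True)
    return ranked[:n]


# Nine made-up cases with distinct scores, followed by five cases tied at 50.
distinct = [(case_id, 100.0 - case_id) for case_id in range(1, 10)]
tied = [(case_id, 50.0) for case_id in range(10, 15)]

result = top_cases(distinct + tied, n=10)
print(len(result), result[-1])
```

A ranking without the second sort key would have to return all 14 cases to include every tie; with it, only the newest tied case (ID 14) takes the tenth slot.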

Another technical challenge was the cross-filtering feature, enabling users to use a filter in one table and have other tables filtered automatically. The functionality did not work correctly, deleting the necessary cases or adding irrelevant cases. Developers solved the problem on the DAX level by using a multi-line measure that included several stages of checks and comparisons, i.e. multiple uses of the IF function.

Results

The implementation of the BI solution produced the following results:

- fact-based understanding of expertise coverage and improvement points discovery

- 19% increase in the number of leads thanks to data-driven creation of selling case studies

- 31% rise in the conversion of leads to opportunities
